Setup a camera configuration for processing a single video#

To process a video, a camera configuration is needed. The camera configuration makes the processing aware how to project the movie’s frames to an orthorectified plane with real-world distances, and a user defined area of interest and processing resolution. It also tells the processing what the water level during the survey video is, so that the depth can be estimated, once bathymetry cross-sections are added. The process for a fixed video setup that takes videos with changing water level conditions is slightly more advanced. Therefore we here start with the assumption that you walk to a stream with a smart phone and a GPS (RTK) device or a spirit level instrument, record control points and record a short video for just one single observation.

In this notebook, we will extract one frame from the survey video to grab the control points. For this example, field observations were collected at the Ngwerere River, in Lusaka. We will first setup an empty camera configuration, and then gradually fill this with the required information. Along the way we plot what we have in a geospatial plot. The information we add is:

Ground-control points (row and columns locations in the frame as well as real world coordinates)

Row and column coordinates that define the area of interest.

The water level during the video and survey (set at zero, because this survey was only done for one single video, this is only relevant if multiple videos with different water levels are processed)

The position of the camera lens. This is relevant in case multiple videos with different water levels are processed. We here add this, but it is not actually used.

[1]:

import xarray as xr

import pyorc

import cartopy

import cartopy.crs as ccrs

import matplotlib.pyplot as plt

Open movie and plot the first frame#

We use the pyorc Video class to open a video file and extract frame number #0 (remember, python starts counting at zero instead of one). Several markers have been placed, some as square shaped checkerboard patterns, others spraypainted with black paint on a rock. All markers are more or less at the water level. If you want to interactively view coordinates you can add %matplotlib notebook to the top of the cell. You can then hover over the image with your mouse and see the coordinates in the

bottom-right. velocimetry is normally done on one image channel (greyscale), but we first explicitly use method="rgb" to extract one frame in rgb colorspace for finding the points.

[2]:

# uncomment line below if you want to view coordinates interactively

#%matplotlib notebook

video_file = "ngwerere/ngwerere_20191103.mp4"

video = pyorc.Video(video_file, start_frame=0, end_frame=1) # we only need one frame

frame = video.get_frame(0, method="rgb")

# plot frame on a notebook-style window

f = plt.figure(figsize=(10, 6))

plt.imshow(frame)

Scanning video: 100%|██████████| 2/2 [00:00<00:00, 17.93it/s]

[2]:

<matplotlib.image.AxesImage at 0x7fb568755ee0>

You can identify different marker x (column) and y (row) positions in the camera’s objective. Below, we have put several of these into a “src” part of the required gcp dictionary. Then we plot the frame and coordinates together.

[3]:

%matplotlib inline

gcps = dict(

src=[

[1421, 1001],

[1251, 460],

[421, 432],

[470, 607]

]

)

f = plt.figure(figsize=(16, 9))

plt.imshow(frame)

plt.plot(*zip(*gcps["src"]), "rx", markersize=20, label="Control points")

plt.legend()

[3]:

<matplotlib.legend.Legend at 0x7fb564249940>

Now we add the rest of the information:

the real world coordinates of the GCPs. These were measured using an RTK GPS unit in the Universe Transverse Mercator (UTM) 35S coordinate reference system (EPSG code 32735). We add these to the GCPs using another key called “dst”.

the water level during the survey as measured in the EPSG 32735 projection (

z_0), which is measured by the RTK GPS unit. This is used to later compute depths from a bathymetry section, measured in the same EPSG 32735 ellipsoid vertical reference.the coordinate reference system (

crs). The camera configuration then understands that everything we do is in UTM 35S. Really nice, because it makes our results geographically aware and geographical plots can be made. We add the crs to the camera configuration while setting it up.

Note that in case you have a fixed camera that regularly takes movies at different water levels, you also would need to set the following:

the locally measured water level

h_refduring your survey. Typically this comes from a staff gauge, that a local person reads out or a pressure gauge. For each video, a new water level must then be provided, which is used to relocate the ground control points to the right location for the new water level, and to estimate the depth over cross-sections, applied later in the process. Since we here process a single video, we don’t have to worry about this.

[4]:

# first add our UTM 35S coordinates. This MUST be in precisely the same order as the src coordinates.

gcps["dst"] = [

[642735.8076, 8304292.1190], # lowest right coordinate

[642737.5823, 8304295.593], # highest right coordinate

[642732.7864, 8304298.4250], # highest left coordinate

[642732.6705, 8304296.8580] # highest right coordinate

]

# # if we would use this video as survey in video, the lines below are also needed,

# # and proper values need to be filled in. They are now commented out.

# gcps["h_ref"] = <your locally measured water level during survey in>

gcps["z_0"] = 1182.2

# set the height and width

height, width = frame.shape[0:2]

# now we use everything to make a camera configuration

cam_config = pyorc.CameraConfig(height=height, width=width, gcps=gcps, crs=32735)

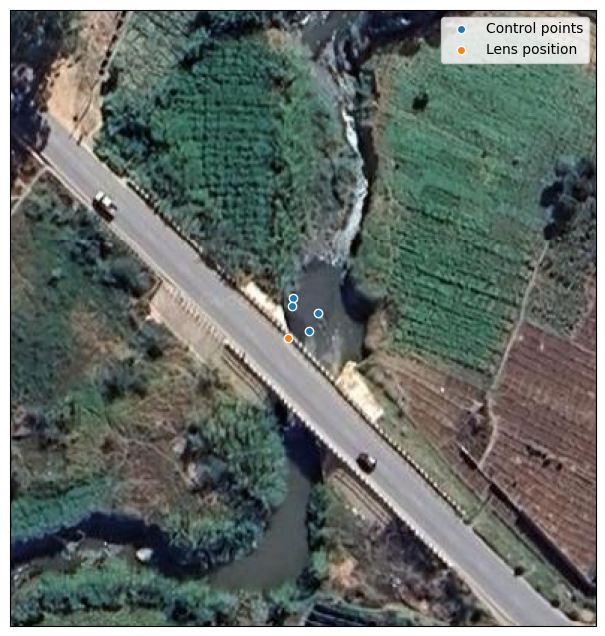

Below we make a quick plot. Cartopy is used to make the plot geographically aware. We use GoogleTiles, using the satellite style, to get some awareness of the surroundings.

[5]:

ax = cam_config.plot(tiles="GoogleTiles", tiles_kwargs={"style": "satellite"})

Finally we add information to define our area of interest, and how the camera objective must be reprojected and the resolution of the velocimetry.

For the area of interest, 4 coordinates must be selected in the camera perspective. A geographically rectangular box will be shaped around those to make a suitable area of interest. We can simply use pixel (column row) xy coordinates for this, so we can select them using the original frame. Below, 4 points are selected and shown in the camera objective.

a target resolution (in meters) must be selected. The resolution is used to reproject the camera objective to a planar projection with real-world coordinates.

a window size (in number of pixels) is needed. Velocimetry will be performed in windows of this size. Since the stream is quite small, we use 1 centimeter (0.01 m) and a 25 pixel (so 25 centimeter) window size, used to find patterns on the water to trace.

[6]:

corners = [

[292, 817],

[50, 166],

[1200, 236],

[1600, 834]

]

cam_config.set_bbox_from_corners(corners)

cam_config.resolution = 0.01

cam_config.window_size = 25

[7]:

f = plt.figure(figsize=(10, 6))

plt.imshow(frame)

plt.plot(*zip(*gcps["src"]), "rx", markersize=20, label="Control points")

plt.plot(*zip(*corners), "co", label="Corners of AOI")

plt.legend()

[7]:

<matplotlib.legend.Legend at 0x7fb564143440>

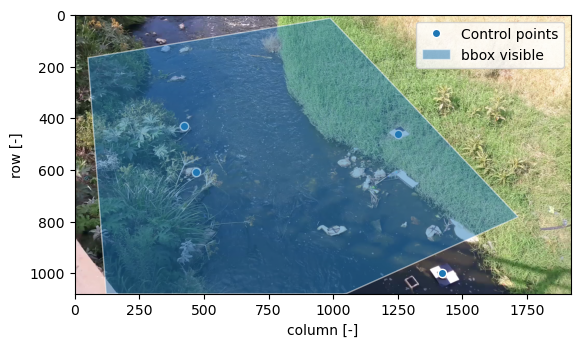

Now that all information is entered, we show the final camera configuration as a plot, both in geographical projection and in camera perspective. The rectangular box can be clearly seen now.

[8]:

%matplotlib inline

ax1 = cam_config.plot(tiles="GoogleTiles", tiles_kwargs={"style": "satellite"})

f = plt.figure()

ax2 = plt.axes()

ax2.imshow(frame)

cam_config.plot(ax=ax2, camera=True)

plt.savefig("ngwerere_camconfig.jpg", bbox_inches="tight", dpi=72)

/tmp/ipykernel_3242/608833754.py:8: DeprecationWarning: The camera=True option is deprecated, use mode='camera' instead. This option will be removed in a future release.

cam_config.plot(ax=ax2, camera=True)

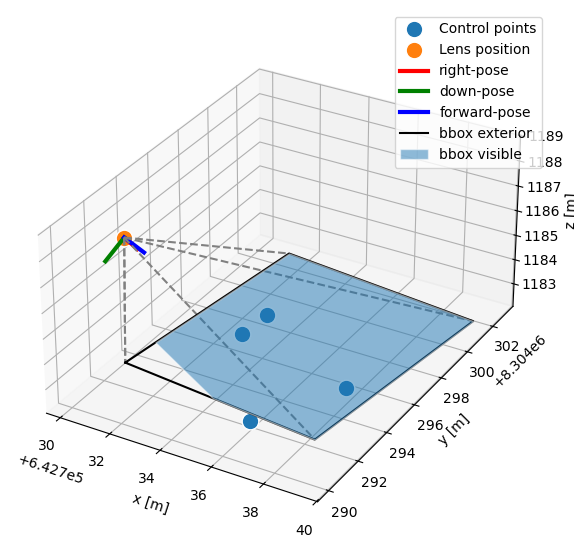

To better grasp the perspective and the situation, a 3D plot may be very useful. The camera configuration has a powerful 3D plotting method.

[9]:

# for interactive plotting, please install ipython matplotlib e.g. through `pip install ipympl`. You may need additional requirements, see https://matplotlib.org/ipympl/installing.html

# then uncomment the line below.

%matplotlib inline

plt.close("all")

f = plt.figure(figsize=(12, 7))

ax = f.add_subplot(projection="3d")

cam_config.plot(mode="3d", ax=ax)

ax.set_aspect("equal")

Our camera configuration is ready. Below we still show a string representation and then we store the configuration to a file for use in our next notebook using the .to_file method.

[10]:

print(cam_config)

cam_config.to_file("ngwerere.json")

{

"height": 1080,

"width": 1920,

"crs": "PROJCRS[\"WGS 84 / UTM zone 35S\",BASEGEOGCRS[\"WGS 84\",ENSEMBLE[\"World Geodetic System 1984 ensemble\",MEMBER[\"World Geodetic System 1984 (Transit)\"],MEMBER[\"World Geodetic System 1984 (G730)\"],MEMBER[\"World Geodetic System 1984 (G873)\"],MEMBER[\"World Geodetic System 1984 (G1150)\"],MEMBER[\"World Geodetic System 1984 (G1674)\"],MEMBER[\"World Geodetic System 1984 (G1762)\"],MEMBER[\"World Geodetic System 1984 (G2139)\"],MEMBER[\"World Geodetic System 1984 (G2296)\"],ELLIPSOID[\"WGS 84\",6378137,298.257223563,LENGTHUNIT[\"metre\",1]],ENSEMBLEACCURACY[2.0]],PRIMEM[\"Greenwich\",0,ANGLEUNIT[\"degree\",0.0174532925199433]],ID[\"EPSG\",4326]],CONVERSION[\"UTM zone 35S\",METHOD[\"Transverse Mercator\",ID[\"EPSG\",9807]],PARAMETER[\"Latitude of natural origin\",0,ANGLEUNIT[\"degree\",0.0174532925199433],ID[\"EPSG\",8801]],PARAMETER[\"Longitude of natural origin\",27,ANGLEUNIT[\"degree\",0.0174532925199433],ID[\"EPSG\",8802]],PARAMETER[\"Scale factor at natural origin\",0.9996,SCALEUNIT[\"unity\",1],ID[\"EPSG\",8805]],PARAMETER[\"False easting\",500000,LENGTHUNIT[\"metre\",1],ID[\"EPSG\",8806]],PARAMETER[\"False northing\",10000000,LENGTHUNIT[\"metre\",1],ID[\"EPSG\",8807]]],CS[Cartesian,2],AXIS[\"(E)\",east,ORDER[1],LENGTHUNIT[\"metre\",1]],AXIS[\"(N)\",north,ORDER[2],LENGTHUNIT[\"metre\",1]],USAGE[SCOPE[\"Navigation and medium accuracy spatial referencing.\"],AREA[\"Between 24\u00b0E and 30\u00b0E, southern hemisphere between 80\u00b0S and equator, onshore and offshore. Botswana. Burundi. Democratic Republic of the Congo (Zaire). Rwanda. South Africa. Tanzania. Uganda. Zambia. Zimbabwe.\"],BBOX[-80,24,0,30]],ID[\"EPSG\",32735]]",

"resolution": 0.01,

"lens_position": null,

"gcps": {

"src": [

[

1421,

1001

],

[

1251,

460

],

[

421,

432

],

[

470,

607

]

],

"dst": [

[

642735.8076,

8304292.119

],

[

642737.5823,

8304295.593

],

[

642732.7864,

8304298.425

],

[

642732.6705,

8304296.858

]

],

"h_ref": 0.0,

"z_0": 1182.2

},

"window_size": 25,

"is_nadir": false,

"camera_matrix": [

[

1429.458251953125,

0.0,

960.0

],

[

0.0,

1429.458251953125,

540.0

],

[

0.0,

0.0,

1.0

]

],

"dist_coeffs": [

[

0.0

],

[

0.0

],

[

0.0

],

[

0.0

],

[

0.0

]

],

"rvec": [

2.3297358530860364,

-0.628441693824629,

0.33425847614047505

],

"tvec": [

3801211.1772794453,

5571248.865910536,

-4887364.457531794

],

"bbox": "POLYGON ((642730.1121582435 8304293.1741271, 642731.2191027412 8304302.71009448, 642739.3644082109 8304301.764579387, 642738.2574637132 8304292.228612008, 642730.1121582435 8304293.1741271))"

}